Foundations of Cryptography Vol I

Chapter I

Solutions to exercises in Chapter 1 of (Goldreich, 2004)

Let X be a random variable such that \mathbb{E}(X) = \mu and X \leq 2\mu. Give an upper bound on Pr[X \leq \frac{\mu}{2}].

A little transformation of random variables to get a non negative RV. Define Y = 2\mu - X \geq 0 \begin{align*} P[X \leq \frac{\mu}{2}] &= P[2\mu - Y \leq \frac{\mu}{2}] \\ &= P[Y \geq 2\mu - \frac{\mu}{2}] \\ &\leq \frac{E[Y]}{1.5\mu} (\#eq:markov1) \\ &= \frac{2}{3} \end{align*}

@ref(eq:markov1) By Markov

Let 0 < \epsilon and \delta < 1, and let Y be a random variable ranging in the interval [0,1] such that \mathbb{E}(Y) = \delta + \epsilon. Give a lower bound on Pr[Y \geq \delta + 2\epsilon ].

Another auxilliary transformation is needed but we will use the proof style of markov as recommended in the guide.

First note if \delta + \frac{\epsilon}{2} > 1, then P[Y \geq \delta + \frac{\epsilon}{2}] = 0 since Y \in [0,1]. So we can assume \delta + \frac{\epsilon}{2} \leq 1.

Similarly, if \delta + \frac{\epsilon}{2} < 0, then P[Y \geq \delta + \frac{\epsilon}{2}] = 1

\begin{align*} E[Y] &= \int_{0}^1 yf(y)dy \\ &= \int_{0}^{\delta + \frac{\epsilon}{2}} yf(y)dy + \int_{\delta + \frac{\epsilon}{2}}^1 yf(y)dy \\ &\geq \int_{\delta + \frac{\epsilon}{2}}^1 yf(y)dy \\ &\geq \delta + \frac{\epsilon}{2}\int_{\delta + \frac{\epsilon}{2}}^1 f(y)dy \\ \end{align*}

Therefore, P[Y \geq \delta + \frac{\epsilon}{2}] \leq \frac{\epsilon + \delta}{\delta + \frac{\epsilon}{2}}.

On input a graph G = (V, E) and two vertices, s and t, we take a random walk of length O(|V | · |E|), starting at vertex s, and test at each step whether or not vertex t is encountered. If vertex t is ever encountered, then the algorithm will accept; otherwise, it will reject. By a random walk we mean that at each step we uniformly select one of the edges incident at the current vertex and traverse this edge to the other endpoint. *show that if s is connected to t in the graph G, then, with probability at least \frac{2}{3}, vertex t will be encountered in a random walk starting at s.

I have not yet been able to solve this problem. TODO with Daniel.

This comes quite directly from Hoeffding’s inequality. A samples s_1, s_2, \dots, s_{p(n)} where s_i \sim Uniform(\{0, 1\}^n) and computes f(s_1), \dots, f(s_{p(n)}) which are identical i.i.d random variables each bounded in [0,1]. Let A_{p(n)} = f(s_1) + \dots + f(s_{p(n)})

By Hoeffding:

\begin{align*} P\Big[ | E[A_{p(n)}] - A_{p(n)} | \geq 1\Big] \leq 2^{-n} \end{align*}

Log bases can be easily converted from one to the other, in this document all bases are \log_2.

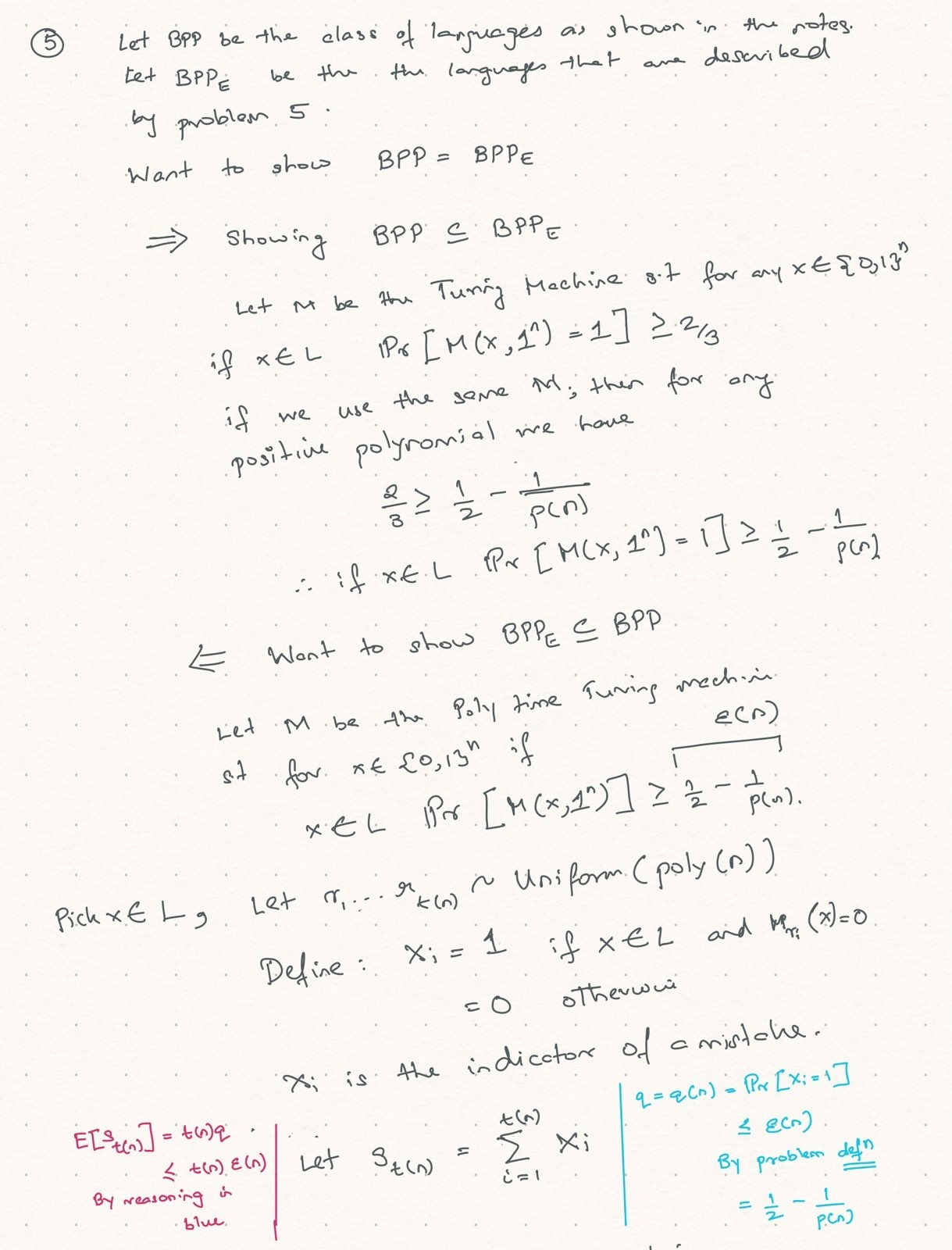

Equivalent definition of BPP. Part 1: Prove that Definition of \mathcal{BPP} is robust when \frac{2}{3} is replaced by \frac{1}{2} + \frac{1}{p(|x|)} for every positive polynomial p(·). Namely, show that L \in \mathcal{BPP} if there exists a polynomial p(·) and a probabilistic polynomial-time machine M such that

- for every x \in L it holds that P[M(x)=1] \geq \frac{1}{2} + \frac{1}{p(|x|)}

- Non false positiveness: for every x \notin L it holds that P[M(x)=0] \geq \frac{1}{2} + \frac{1}{p(|x|)}

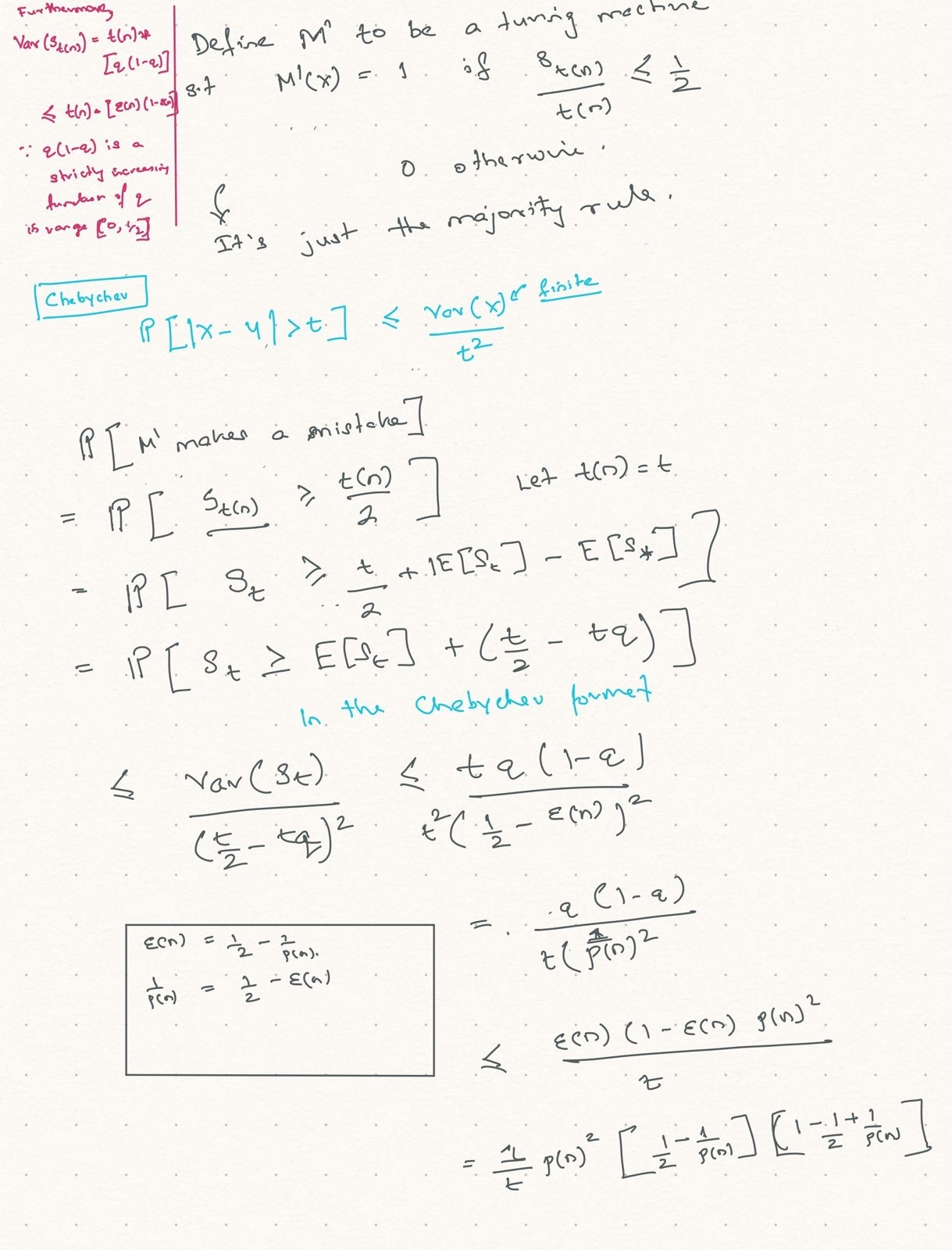

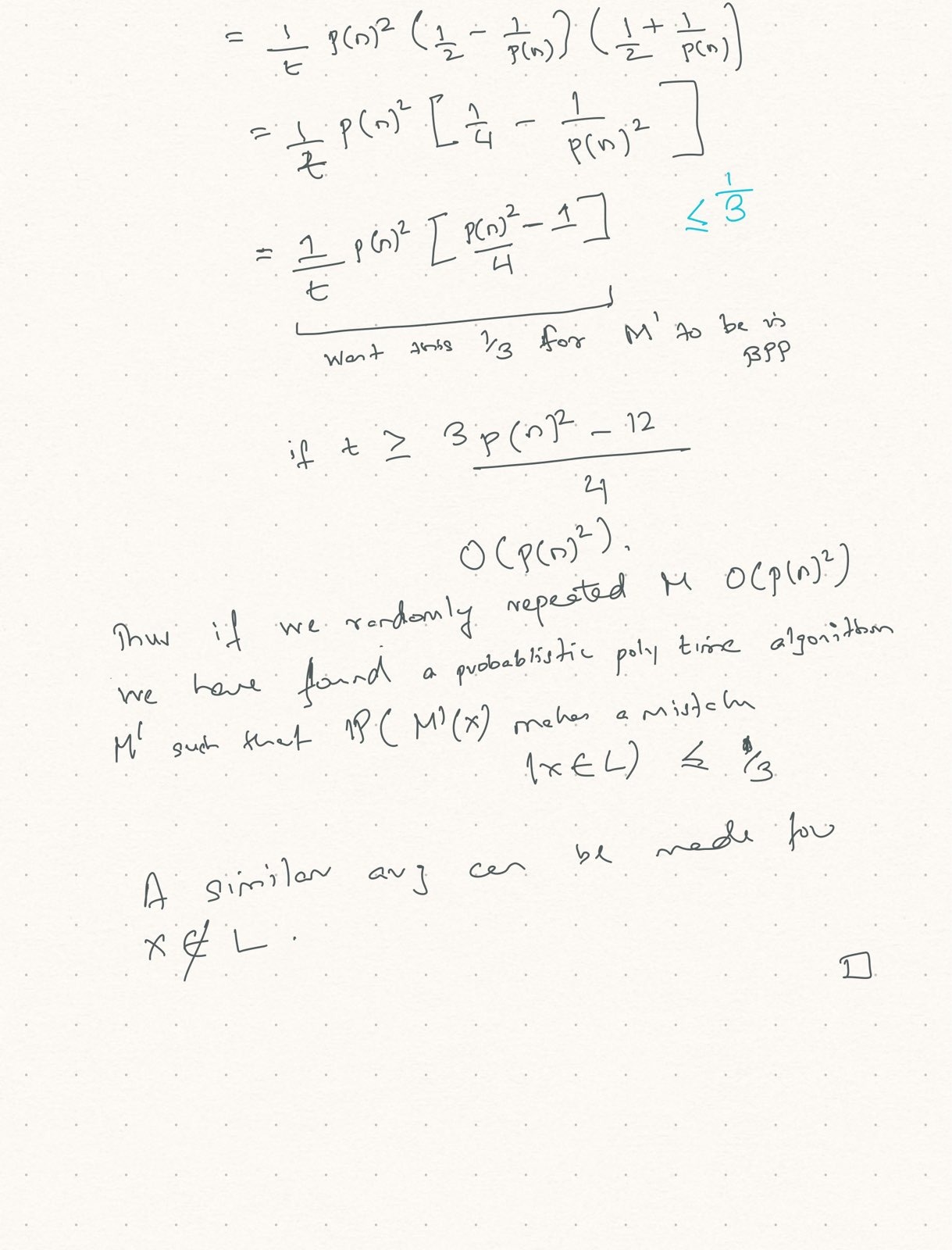

Same idea as above but using Chebychev’s instead of Chernoff. Did not want to write latex so uploading picture of solution.

Now do the same again: but replace \frac{2}{3} by 1 + \frac{1}{2^{p(|x|)}}

Assume L \in \mathsf{BPP}, this implies that there exists some probabilistic polynomial time turing macine M such that

P\Big[ M(x) = 1 | x \in L \Big] \geq 2/3.

Define r_1, \dots, r_{t(n)} as randomv variables sampled uniformly at random from U^n. For the sake convenience we will write t(n) = t. Define X_i = 1 if M_{r_i}(x) = I[x \in L]. Simply put if X_i=1, then M_{r} predicted correctly.

Let S_t = X_1 + \dots + X_{r_t}. Define a turing machine \hat{M} such that \hat{M}(x) = 1 if S_t \geq \frac{t}{2}. In other words, \hat{M} selects the majority from the predictions of the random machines. Note each X_i is a bernoulli random variable with P\Big[X_i = 1 | x\in L\Big] = p \geq \frac{2}{3}

Reminder, the chernoff bound, for a sum of t bernoulli variables with mean q is

\begin{align*} P[S_t \leq (1 - \delta)qt] \leq \text{exp}\{- \frac{\delta^2p}{2}\} \end{align*}

Now \hat{M} makes a mistake if x \in L but S_t \leq t/2 \begin{align*} P[\hat{M} \text{ makes a mistake}] &= P\Big[ S_t \leq \frac{t}{2} \text{ | } x \in L \Big] \\ &= P\Big[ S_t \leq (1 - (1 - \frac{1}{2p}))pt \text{ | } x \in L \Big] \\ &\leq \mathsf{exp}\left( \{\right)- t\frac{(p - 1/2)^2}{2p}\} \\ &= \mathsf{exp}\left( \{\right)- f(p)\frac{t}{2}\} (\#eq:inc) \end{align*}

@ref(eq:inc) f(p) = \frac{(p - 1/2)^2}{p} is an increasing function in (1/2, \infty), therefore e^{-f(p)} is decreasing. We have p \geq 2/3, therefore e^{-f(p)} \leq e^{-f(2/3)} = e^{-\frac{1}{24}}. Using this,

\begin{align*} P[\hat{M} \text{ makes a mistake}] &= P\Big[ S_t \leq \frac{t}{2} \text{ | } x \in L \Big] \\ &= \mathsf{exp}\left( \{\right)- f(p)\frac{t}{2}\} \\ &= \mathsf{exp}\left( \{\right)- \frac{1}{24}\frac{t}{2}\} \\ &\leq 2^{-p(n)} \end{align*}

If t(n) \geq (48 \ln(2))p(n) \approx O(n), then \hat{M} is poly turing machine that does the job

The other side is trivial, in that, pick p(n) \geq -\ln(1/3) and assume P\Big[ M(x) = 1 | x \in L \Big] \geq 1 - 2^{-p(n)}. Then P\Big[ M(x) = 1 | x \in L \Big] \geq \frac{2}{3}.